Sigmoid and Tanh Activation Functions

This post will cover the sigmoid and hyperbolic tangent activation functions in detail. The applications, advantages and disadvantages of these two common activation functions will be covered, as well as finding their derivatives and visualizing each function.

- Review

- The Hyperbolic Tangent Function

- Derivative of Tanh

- The Sigmoid Function

- Derivative of the Sigmoid Function

- Plotting Activation Functions

Review

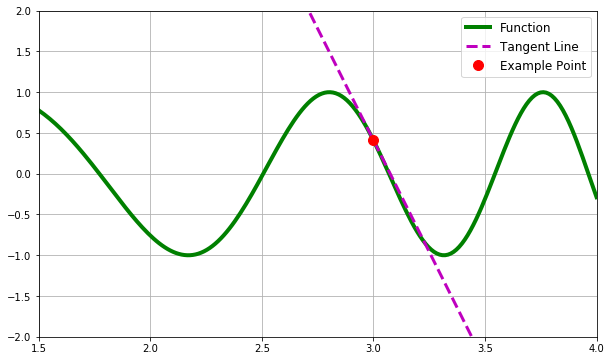

First off, a quick review of derivatives may be helpful. A derivative is simply a fancy name for the slope of a function at a point: for any given point on a function’s graph, the derivative is the slope of the tangent line at that point. The code below plots a function together with its tangent line at one example point.

import numpy as np

import matplotlib.pyplot as plt

def f(x):

    return np.sin(np.power(x, 2))

x = np.linspace(1.5,4,200)

y = f(x)

a = 3

h = 0.001 # h approaches zero

derivative = (f(a + h)- f(a))/h # difference quotient

tangent = f(a) + derivative * (x - a) # finding the tangent line

plt.figure(figsize=(10,6))

plt.ylim(-2,2)

plt.xlim(1.5, 4)

plt.plot(x, y, linewidth=4, color='g', label='Function')

plt.plot(x, tangent, color='m', linestyle='--', linewidth=3, label='Tangent Line')

plt.plot(a, f(a), 'o', color='r', markersize=10, label='Example Point')

plt.legend(fontsize=12)

plt.grid(True)

plt.show()

Everyone learns the formula for the slope of a line in middle school. It is commonly remembered as “rise over run”, or the change in y divided by the change in x. A derivative is the same idea, the change in y over the change in x, measured over a vanishingly small interval. The slope can be written like this:

$$\text{slope} = \frac{\Delta y}{\Delta x} = \frac{y_2 - y_1}{x_2 - x_1}$$

To find the derivative of a function, we can use the difference quotient. The difference quotient calculates the slope of the secant line through two points on a function’s graph, at x and (x + Δx). As the change in x approaches zero, the secant line gets closer and closer to the tangent line, so its slope approaches the instantaneous rate of change, or the slope at a single point. The difference quotient is written like this:

$$\frac{f(x + \Delta x) - f(x)}{\Delta x}$$

Below is an example of how the difference quotient is used to find the derivative of a function. Note: “delta x” has been substituted with “h” for simplicity. The example function will be $f(x) = x^2$.

To find the derivative of $x^2$, we plug (x + h) into the function, then subtract f(x), which was given as $x^2$. The limit of the difference quotient is the instantaneous rate of change. In the difference quotient, “h” approaches zero but never becomes zero. Once the “h” in the denominator has been cancelled, the remaining “h” is close enough to zero to be treated as zero, because adding it to 2x is equivalent to adding zero. The example below uses the difference quotient to show that the derivative of $x^2$ is 2x.

$$\begin{aligned}
\frac{d}{dx}x^2 &= \lim_{h \to 0} \frac{(x + h)^2 - x^2}{h} \\
&= \lim_{h \to 0} \frac{x^2 + 2xh + h^2 - x^2}{h} \\
&= \lim_{h \to 0} \frac{2xh + h^2}{h} \\
&= \lim_{h \to 0} (2x + h) \\
&= 2x
\end{aligned}$$
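The same limit can be watched numerically. The sketch below is only an illustration: the helper names `difference_quotient` and `square` and the example point `x0 = 3.0` are assumptions chosen for this example, not part of the original post. For $x^2$ the secant slope works out to 2x + h, so the values should drift toward 6 as h shrinks.

```python
def difference_quotient(f, x, h):
    """Slope of the secant line through (x, f(x)) and (x + h, f(x + h))."""
    return (f(x + h) - f(x)) / h


def square(x):
    """The example function f(x) = x^2."""
    return x ** 2


x0 = 3.0  # arbitrary example point; the exact derivative there is 2 * x0

# Shrink h and watch the secant slope approach the tangent slope.
for h in (1.0, 0.1, 0.01, 0.001):
    slope = difference_quotient(square, x0, h)
    print(f"h = {h:<6} secant slope = {slope:.4f}")
```

Choosing h too small eventually hurts rather than helps, because floating-point rounding in `f(x + h) - f(x)` starts to dominate, which is why the plotting code earlier uses a moderate `h = 0.001`.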

The Hyperbolic Tangent Function

# EXAMPLE FUNCTION

def tanh(x, deriv=False):

    if deriv:

        # derivative of tanh at x: 1 - tanh(x)^2

        return 1 - tanh(x)**2

    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

    # return np.tanh(x)  # equivalent, and avoids overflow for large |x|

The hyperbolic tangent function is a nonlinear activation function commonly used in simpler neural network implementations. Nonlinear activation functions are typically preferred over linear ones because a stack of purely linear layers collapses into a single linear map, while nonlinearities let a network fit curved relationships in the data. The tanh function is often a better choice than the sigmoid function (AKA logistic function): both share a sigmoidal, or S-shaped, curve, but the sigmoid’s outputs lie between 0 and 1, while tanh’s outputs lie between -1 and 1. This wider range lets tanh map negative inputs to negative outputs, while near-zero inputs are mapped to near-zero outputs. Additionally, tanh keeps its outputs centered around zero, and its derivative peaks at 1 compared with 0.25 for the sigmoid, which gives stronger and more useful gradients.
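To make the zero-centering point concrete, here is a small sketch that feeds the same inputs through both functions and compares the average output. The input grid and the helper name `sigmoid` are assumptions made for this illustration only.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical inputs, evenly spaced and symmetric around zero.
inputs = [i / 10 for i in range(-50, 51)]

tanh_outputs = [math.tanh(x) for x in inputs]
sigmoid_outputs = [sigmoid(x) for x in inputs]

mean_tanh = sum(tanh_outputs) / len(tanh_outputs)
mean_sigmoid = sum(sigmoid_outputs) / len(sigmoid_outputs)

print(f"mean tanh output:    {mean_tanh:.4f}")
print(f"mean sigmoid output: {mean_sigmoid:.4f}")
print(f"tanh(-2) = {math.tanh(-2.0):.4f}, sigmoid(-2) = {sigmoid(-2.0):.4f}")
```

With symmetric inputs the tanh outputs average out to roughly zero, while the sigmoid outputs average out to roughly 0.5, and a negative input stays negative only under tanh.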

Similar to the tangent function (sine over cosine), the hyperbolic tangent function is equal to the hyperbolic sine over the hyperbolic cosine.

$$\tanh(x) = \frac{\sinh(x)}{\cosh(x)}$$

The hyperbolic sine and the hyperbolic cosine can be written like this:

$$\sinh(x) = \frac{e^x - e^{-x}}{2} \qquad \cosh(x) = \frac{e^x + e^{-x}}{2}$$

We can write the hyperbolic sine over the hyperbolic cosine, which creates a fraction divided by another fraction. This is the same as the numerator fraction multiplied by the reciprocal of the denominator fraction, commonly remembered as “Keep, Change, Flip”. After multiplying the two fractions, the two on the top and the two on the bottom cancel, which leaves us with the hyperbolic tangent function.

$$\tanh(x) = \frac{\frac{e^x - e^{-x}}{2}}{\frac{e^x + e^{-x}}{2}} = \frac{e^x - e^{-x}}{2} \cdot \frac{2}{e^x + e^{-x}} = \frac{e^x - e^{-x}}{e^x + e^{-x}}$$
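As a quick sanity check on that simplification, the sketch below evaluates the exponential form, the sinh-over-cosh form, and the standard library's `math.tanh` at a few sample points. The function names and sample points are assumptions for illustration.

```python
import math


def tanh_from_exponentials(x):
    """tanh written as (e^x - e^-x) / (e^x + e^-x)."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))


def tanh_from_sinh_cosh(x):
    """tanh written as sinh(x) / cosh(x)."""
    return math.sinh(x) / math.cosh(x)


sample_points = (-2.0, -0.5, 0.0, 0.5, 2.0)

for x in sample_points:
    print(
        f"x = {x:+.1f}  exponentials = {tanh_from_exponentials(x):+.6f}  "
        f"sinh/cosh = {tanh_from_sinh_cosh(x):+.6f}  math.tanh = {math.tanh(x):+.6f}"
    )
```

All three columns should agree to within floating-point rounding. For very large inputs the exponential form overflows (`math.exp` raises `OverflowError`), so a library `tanh` is the safer choice in real code.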

Derivative of Tanh

Now let’s find the derivative of the hyperbolic tangent function. We know that the hyperbolic tangent function is written as the hyperbolic sine over the hyperbolic cosine. When dealing with the derivative of a quotient of two functions, we can use the quotient rule. The quotient rule formula uses the function in the numerator as f(x) and the function in the denominator as g(x). The quotient rule formula is written as:

$$\frac{d}{dx}\left[\frac{f(x)}{g(x)}\right] = \frac{g(x)\,f'(x) - f(x)\,g'(x)}{g(x)^2}$$

Using the quotient rule follows the steps below:

- Take the product of the function g(x) and the derivative of f(x).

- Take the product of the function f(x) and the derivative of g(x).

- Take the difference between the first product and the second product.

- Divide by g(x) squared.
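The order of the subtraction matters: the rule is g(x)·f′(x) minus f(x)·g′(x), all over g(x) squared. The sketch below checks that ordering numerically on a made-up pair of functions (sin(x) on top, x² + 1 on the bottom). These functions and the point `x0` are assumptions chosen only because their derivatives are easy to write down.

```python
import math


def f(x):
    return math.sin(x)


def f_prime(x):
    return math.cos(x)


def g(x):
    return x ** 2 + 1


def g_prime(x):
    return 2 * x


def quotient_rule(x):
    """Derivative of f(x) / g(x): (g * f' - f * g') / g^2."""
    return (g(x) * f_prime(x) - f(x) * g_prime(x)) / g(x) ** 2


def central_difference(func, x, h=1e-6):
    """Numerical slope estimate using points on both sides of x."""
    return (func(x + h) - func(x - h)) / (2 * h)


x0 = 1.3  # arbitrary example point
analytic = quotient_rule(x0)
numeric = central_difference(lambda x: f(x) / g(x), x0)

print(f"quotient rule:      {analytic:.8f}")
print(f"numerical estimate: {numeric:.8f}")
```

Swapping the two products flips the sign of the result, which the numerical estimate would immediately expose.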

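Now, if we apply the quotient rule to find the derivative of the hyperbolic tangent function, then it would look something like this:

$$\frac{d}{dx}\tanh(x) = \frac{\cosh(x)\,\frac{d}{dx}\sinh(x) - \sinh(x)\,\frac{d}{dx}\cosh(x)}{\cosh^2(x)}$$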

After doing this, we run into another problem. What are the derivatives of the sinh and cosh functions? To answer this, we first need to find the derivatives of $e^x$ and $e^{-x}$, since both sinh(x) and cosh(x) are built from them. $e^x$ is known to be a very special function because its derivative is simply itself: at every point, the slope of $e^x$ equals the value of the function. Why is this true though?

Derivative of $e^x$

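Let’s start to take the derivative of $e^x$. First, we will use the difference quotient and plug in $e^x$ for f(x).

$$\frac{d}{dx}e^x = \lim_{h \to 0} \frac{e^{x+h} - e^x}{h}$$

Using the product rule of exponents, we can split $e^{x+h}$ into $e^x \cdot e^h$ and make things a bit easier to deal with. Because $e^x$ does not depend on h, we can then factor it out of the numerator and move it outside the limit.

$$\frac{d}{dx}e^x = \lim_{h \to 0} \frac{e^x e^h - e^x}{h} = e^x \lim_{h \to 0} \frac{e^h - 1}{h}$$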

Now things may start to look weird. Where do we go from here? Are we stuck? Before we progress any further, let’s take a look at the definition of Euler’s number (e). Euler’s number can be defined as:

$$e = \lim_{n \to \infty} \left(1 + \frac{1}{n}\right)^n$$

Here comes the really cool part. If we rewrite the definition of e in terms of h (plug in 1/h for “n”, so that h approaches zero as n grows), it would look like this:

$$e = \lim_{h \to 0} (1 + h)^{1/h}$$

Looking back at where we last left off for the derivative of $e^x$, we notice that we have an $e^h$, so we can take our definition of “e” and raise it to the power of “h”. For h close to zero this gives:

$$e^h \approx \left((1 + h)^{1/h}\right)^h$$

Using the power rule of exponents, we can simplify this expression further. For reference, the power rule of exponents states that:

$$\left(a^m\right)^n = a^{mn}$$

Now if we apply this to our definition of “e” raised to the power of “h”, the exponents cancel each other out. Remember that anything raised to the power of one will always equal itself. This process will look like this:

$$e^h \approx \left((1 + h)^{1/h}\right)^h = (1 + h)^{\frac{1}{h} \cdot h} = (1 + h)^1 = 1 + h$$

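Now that we know what $e^h$ equals as h approaches zero, we can go back to finding the derivative of $e^x$ and rewrite it by replacing $e^h$ with 1 + h like so:

$$\frac{d}{dx}e^x = e^x \lim_{h \to 0} \frac{(1 + h) - 1}{h} = e^x \lim_{h \to 0} \frac{h}{h}$$

We can see that the limit is equal to one: h/h equals one for every nonzero h, so it stays one as h approaches zero. The derivative of $e^x$ becomes this:

$$\frac{d}{dx}e^x = e^x \cdot 1 = e^x$$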
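The key step in this argument is that $(e^h - 1)/h$ tends to one as h approaches zero. The sketch below checks that numerically and then compares a difference quotient of $e^x$ against $e^x$ itself. The helper names and the sample point `x0 = 1.5` are assumptions for illustration.

```python
import math


def limit_ratio(h):
    """The factor (e^h - 1) / h left over after factoring out e^x."""
    return (math.exp(h) - 1.0) / h


def exp_difference_quotient(x, h=1e-6):
    """Difference quotient of e^x with a small, fixed h."""
    return (math.exp(x + h) - math.exp(x)) / h


# The ratio should creep toward 1 as h shrinks.
for h in (0.1, 0.01, 0.001, 0.0001):
    print(f"h = {h:<7} (e^h - 1) / h = {limit_ratio(h):.6f}")

x0 = 1.5  # arbitrary example point
print(f"difference quotient at x0: {exp_difference_quotient(x0):.6f}")
print(f"e^x0 itself:               {math.exp(x0):.6f}")
```

The last two printed values should match to several decimal places, which is the numerical face of the statement that $e^x$ is its own derivative.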

Derivative of $e^{-x}$

Finding the derivative of $e^{-x}$ will be much easier now that we know the derivative of $e^x$. We could find the derivative of $e^{-x}$ the same way that we found the derivative of $e^x$, but I want to show a different way where we use the quotient rule. First things first, we will need to rewrite $e^{-x}$ like this:

$$e^{-x} = \frac{1}{e^x}$$

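Using the quotient rule that was mentioned above, with f(x) = 1 and g(x) = $e^x$, the derivative of $e^{-x}$ can be written like this:

$$\frac{d}{dx}\left[\frac{1}{e^x}\right] = \frac{e^x \cdot 0 - 1 \cdot e^x}{(e^x)^2} = \frac{-e^x}{(e^x)^2}$$

Now we can divide the numerator and the denominator by $e^x$ and get:

$$\frac{-e^x}{(e^x)^2} = \frac{-1}{e^x}$$

To finish everything, we can bring the $e^x$ back up into the numerator by making the exponent negative.

$$\frac{d}{dx}e^{-x} = -e^{-x}$$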

Now we know the derivatives of both $e^x$ and $e^{-x}$. This means we can finally start to find the derivatives of the hyperbolic sine and cosine functions.

Derivative of Tanh Continued

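We are now fully equipped to find the derivatives of the hyperbolic sine and cosine functions. Since we know the derivatives of $e^x$ and $e^{-x}$, this should be easy. The process of finding the derivative of sinh looks like this:

$$\frac{d}{dx}\sinh(x) = \frac{d}{dx}\left[\frac{e^x - e^{-x}}{2}\right] = \frac{e^x - (-e^{-x})}{2} = \frac{e^x + e^{-x}}{2} = \cosh(x)$$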

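Next, the derivative of cosh can be found like this:

$$\frac{d}{dx}\cosh(x) = \frac{d}{dx}\left[\frac{e^x + e^{-x}}{2}\right] = \frac{e^x + (-e^{-x})}{2} = \frac{e^x - e^{-x}}{2} = \sinh(x)$$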

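We can now go back to where we started finding the derivative of tanh and rewrite it to look like this:

$$\frac{d}{dx}\tanh(x) = \frac{\cosh(x)\cosh(x) - \sinh(x)\sinh(x)}{\cosh^2(x)} = \frac{\cosh^2(x) - \sinh^2(x)}{\cosh^2(x)} = 1 - \frac{\sinh^2(x)}{\cosh^2(x)} = 1 - \tanh^2(x)$$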

After all of that work, we have finally found the derivative of the hyperbolic tangent function.
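Each derivative in this chain can be double-checked numerically. The sketch below compares a central-difference estimate against the closed form claimed for $e^{-x}$, sinh, cosh, and tanh. The helper `central_difference`, the `checks` table, and the sample point `x0 = 0.7` are assumptions introduced for this illustration.

```python
import math


def central_difference(func, x, h=1e-6):
    """Numerical slope estimate using points on both sides of x."""
    return (func(x + h) - func(x - h)) / (2 * h)


# (label, function, claimed derivative)
checks = [
    ("e^-x", lambda x: math.exp(-x), lambda x: -math.exp(-x)),
    ("sinh", math.sinh, math.cosh),
    ("cosh", math.cosh, math.sinh),
    ("tanh", math.tanh, lambda x: 1.0 - math.tanh(x) ** 2),
]

x0 = 0.7  # arbitrary example point
max_error = 0.0

for label, func, claimed_derivative in checks:
    numeric = central_difference(func, x0)
    claimed = claimed_derivative(x0)
    max_error = max(max_error, abs(numeric - claimed))
    print(f"{label:>5}: numeric = {numeric:+.8f}  claimed = {claimed:+.8f}")

print(f"largest disagreement: {max_error:.2e}")
```

The two columns should agree to many decimal places. A sign slip anywhere in the derivation, for example in the derivative of $e^{-x}$, would show up here as a visible mismatch.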

The Sigmoid Function

# EXAMPLE FUNCTION

def sigmoid(x, deriv=False):

    if deriv:

        # derivative of the sigmoid at x: S(x) * (1 - S(x))

        s = sigmoid(x)

        return s * (1 - s)

    return 1 / (1 + np.exp(-x))

The sigmoid function, also known as the logistic function, is often very helpful when predicting an output between 0 and 1, such as a probability in a binary classification problem. As mentioned earlier, the sigmoid function squeezes all values into the range between 0 and 1. Negative inputs are mapped to values between 0 and 0.5, and large negative inputs are mapped close to zero, which can cause some problems. The most commonly known issue with the sigmoid function is the vanishing gradient problem, which is common amongst neural networks with many layers that use the sigmoid function. The sigmoid function’s derivative is never larger than 0.25, so when these derivatives are multiplied together during backpropagation, the product gets smaller and smaller until it eventually becomes useless. The smaller the gradients, the less effective backpropagation is: weights will not be updated at a useful rate, and the neural network will seem to be stuck. The sigmoid function is not as popular as it once was due to this problem, but for simple neural network architectures, it can still be a fast and efficient activation function that gets the job done properly. The sigmoid function is written below:

$$S(x) = \frac{1}{1 + e^{-x}}$$
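To put rough numbers on the vanishing gradient problem, here is a deliberately simplified sketch. It assumes the best case, in which every layer sits exactly at the sigmoid's steepest point (x = 0), and it ignores the weights that real backpropagation also multiplies in. It uses the derivative S(x)(1 − S(x)), which is derived in the next section. The function names and layer counts are illustrative assumptions.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    """Derivative of the sigmoid, S(x) * (1 - S(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


# The derivative is largest at x = 0, where it equals 0.25.
max_slope = sigmoid_derivative(0.0)

# Simplifying assumption: one sigmoid derivative factor per layer, no weights.
for depth in (1, 5, 10, 20):
    best_case_factor = max_slope ** depth
    print(f"{depth:>2} layers: gradient scaled by at most {best_case_factor:.3e}")
```

Even in this best case the scaling factor shrinks by a factor of four with every additional layer, and away from x = 0 the derivative is smaller still.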

Derivative of the Sigmoid Function

It is time to find the derivative of the sigmoid function. The sigmoid function will be denoted as S(x) (as shown above). Just as we did with the tanh function, we will use the quotient rule to find the derivative of the sigmoid function. As a reminder, the quotient rule is written below:

$$\frac{d}{dx}\left[\frac{f(x)}{g(x)}\right] = \frac{g(x)\,f'(x) - f(x)\,g'(x)}{g(x)^2}$$

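Now, we can plug our values into the quotient rule’s formula, with f(x) = 1 and g(x) = $1 + e^{-x}$. This will look like this:

$$S'(x) = \frac{(1 + e^{-x}) \cdot 0 - 1 \cdot (-e^{-x})}{(1 + e^{-x})^2}$$

Anything multiplied by zero is zero and anything multiplied by one is itself, so we can simplify it like this:

$$S'(x) = \frac{e^{-x}}{(1 + e^{-x})^2}$$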

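To further progress through our derivation, we can add one and subtract one in the numerator. This will not change the function because one minus one is zero. We are essentially using a fancy method of adding zero to the numerator to help simplify. The above expression then becomes:

$$S'(x) = \frac{1 + e^{-x} - 1}{(1 + e^{-x})^2}$$

From here on out we just simplify the above expression to find the derivative of the sigmoid function.

$$\begin{aligned}
S'(x) &= \frac{1 + e^{-x}}{(1 + e^{-x})^2} - \frac{1}{(1 + e^{-x})^2} \\
&= \frac{1}{1 + e^{-x}} - \left(\frac{1}{1 + e^{-x}}\right)^2 \\
&= S(x) - S(x)^2
\end{aligned}$$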

If the sigmoid function is written as S(x), then the derivative of the sigmoid function is:

$$S'(x) = S(x)\left(1 - S(x)\right)$$

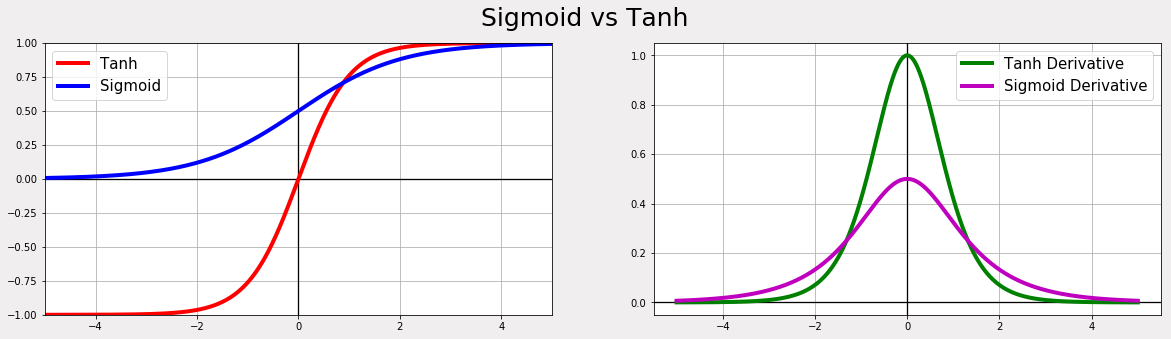
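The two forms of the derivative that appear in this derivation, the quotient-rule form $e^{-x} / (1 + e^{-x})^2$ and the compact form S(x)(1 − S(x)), should give the same numbers. The sketch below checks both against a numerical slope estimate. The helper names and sample points are assumptions for illustration.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    """Compact form: S(x) * (1 - S(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def quotient_rule_form(x):
    """Unsimplified form: e^-x / (1 + e^-x)^2."""
    return math.exp(-x) / (1.0 + math.exp(-x)) ** 2


def central_difference(func, x, h=1e-6):
    """Numerical slope estimate using points on both sides of x."""
    return (func(x + h) - func(x - h)) / (2 * h)


sample_points = (-3.0, -1.0, 0.0, 1.0, 3.0)

for x in sample_points:
    print(
        f"x = {x:+.1f}  compact = {sigmoid_derivative(x):.8f}  "
        f"quotient form = {quotient_rule_form(x):.8f}  "
        f"numeric = {central_difference(sigmoid, x):.8f}"
    )
```

The compact form is popular in practice because, when the sigmoid output has already been computed during the forward pass, the derivative can reuse it without evaluating another exponential.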

Plotting Activation Functions

Sometimes having a visual can make the process a bit more intuitive. Below is a comparison of two activation functions: the sigmoid function (logistic function) and the hyperbolic tangent function. This visual comparison may help in understanding the differences and similarities between the two. To create it, we will use Matplotlib, a useful Python plotting library. We will create two subplots: one compares the two activation functions (left) and the other compares their derivatives (right).

The code for this visualization is below:

import numpy as np

import matplotlib.pyplot as plt

fig, ax = plt.subplots(1, 2, figsize=(20,5), facecolor='#F0EEEE')

# Functions Axes Lines

ax[0].axvline(linewidth=1.3, color='k')

ax[0].axhline(linewidth=1.3, color='k')

# Derivatives Axes Lines

ax[1].axvline(linewidth=1.3, color='k')

ax[1].axhline(linewidth=1.3, color='k')

x = np.arange(-5,5,.0001)

# y = tanh, z = sigmoid

y = np.tanh(x)

z = 1 / (1 + np.exp(-x))

# Plot Activation Functions

ax[0].plot(x, y, linewidth=4, color='r', label='Tanh')

ax[0].plot(x, z, linewidth=4, color='b', label='Sigmoid')

# Derivative Equations

tanh_deriv = 1 - np.tanh(x)**2

sig_deriv = np.exp(-x) / (1 + np.exp(-x))**2

# Plot Derivatives

ax[1].plot(x, tanh_deriv,linewidth=4, color='g', label='Tanh Derivative')

ax[1].plot(x, sig_deriv,linewidth=4, color='m', label='Sigmoid Derivative')

# Main Title

fig.suptitle("Sigmoid vs Tanh", fontsize=25)

# Grid

ax[0].grid(True)

ax[1].grid(True)

# Legend

ax[1].legend(fontsize=15)

ax[0].legend(fontsize=15)

# First Graph Limits (looks prettier)

ax[0].set_xlim(-5, 5)

ax[0].set_ylim(-1, 1)

plt.show()

As mentioned earlier, the hyperbolic tangent function shares an S-shaped curve with the sigmoid function. The left plot shows the ranges of the two activation functions, and also shows that the hyperbolic tangent function is centered around zero, while the sigmoid function is centered around 0.5. Notice that the sigmoid function cannot go into the negatives: negative inputs are mapped to values between 0 and 0.5, because the sigmoid function cannot go any lower than zero. The right plot shows the stronger derivative of the hyperbolic tangent function, which peaks at 1, next to the sigmoid’s less pronounced derivative, which peaks at 0.25.